The previous articles covered decapsulation (demuxing) and decoding, which produce the raw audio and video data in PCM and YUV form. Today, let's talk about the inverse operation of decapsulation: encapsulation (muxing).

What is audio and video encapsulation?

It is easy to understand: audio and video encapsulation packages the audio and video streams together with the additional information needed to use them later.

Take the PCM data mentioned above. To play it, you must supply parameters such as the sampling rate, the number of channels, and the sample format; otherwise it cannot be played. A packaged audio file such as an mp3 file, on the other hand, can be played by giving only the file name.

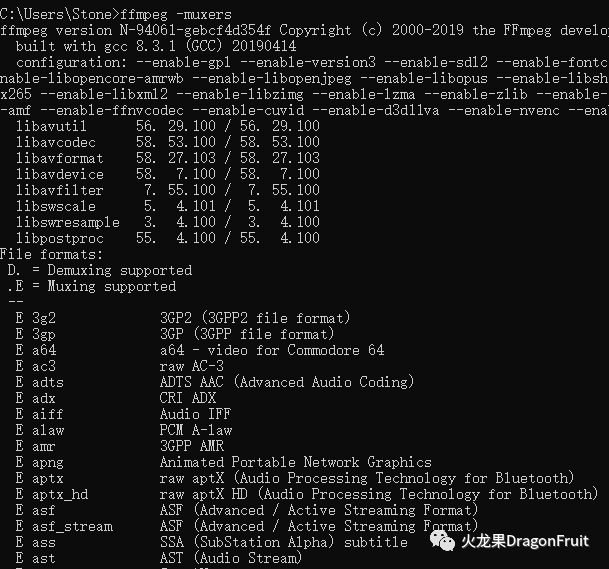

Container formats (muxers) supported by FFmpeg:

- Command line: ffmpeg -muxers

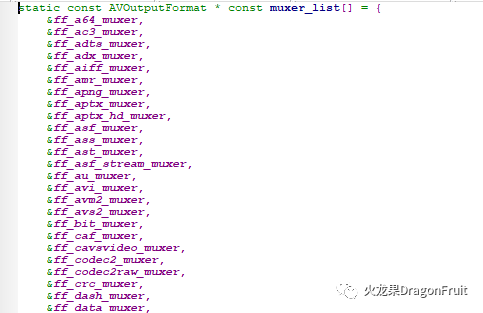

- Source code: muxer_list.c, which defines muxer_list, an array of pointers to the muxers.

The structure corresponding to a muxer: AVOutputFormat

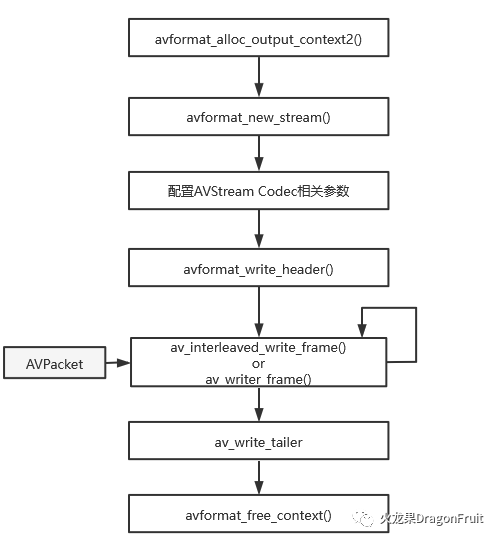

The basic muxing process:

- Allocate the output AVFormatContext:

  int avformat_alloc_output_context2(AVFormatContext **ctx, ff_const59 AVOutputFormat *oformat, const char *format_name, const char *filename);

- Add a data stream (AVStream) to the output file. An AVStream represents an audio stream, a video stream, or a subtitle stream:

  AVStream *avformat_new_stream(AVFormatContext *s, const AVCodec *c);

- Configure the codec-related parameters of the AVStream by setting its AVCodecParameters.

- Write the file header:

  int avformat_write_header(AVFormatContext *s, AVDictionary **options);

  This function calls the write_header method of the corresponding muxer.

- Write data to the output file with av_interleaved_write_frame or av_write_frame.

  The difference between the two is that the former buffers and reorders the incoming AVPackets so that the packets written to the file are in increasing dts order. The latter does no buffering, so the caller has to do the reordering itself.

- Write the file trailer:

  int av_write_trailer(AVFormatContext *s);

  This function calls the muxer's write_trailer method.

- Release the AVFormatContext:

  void avformat_free_context(AVFormatContext *s);

Note: the API that writes the file header is prefixed with avformat_, while the APIs that write the data and the file trailer are prefixed with av_.

Official demo analysis:

remuxing.c:

In this example, the input file is decapsulated first, and then repackaged directly, without decoding and encoding operations.

Decapsulation was covered in the previous article, so only the encapsulation part is analyzed here.

    #include <libavutil/timestamp.h>
    #include <libavformat/avformat.h>

    /* log_packet() is a helper defined in remuxing.c; its definition is omitted here. */

    int main(int argc, char **argv)
    {
        AVOutputFormat *ofmt = NULL;
        AVFormatContext *ifmt_ctx = NULL, *ofmt_ctx = NULL;
        AVPacket pkt;
        const char *in_filename, *out_filename;
        int ret, i;
        int stream_index = 0;
        int *stream_mapping = NULL;
        int stream_mapping_size = 0;

        if (argc < 3) {
            printf("usage: %s input output\n"
                   "API example program to remux a media file with libavformat and libavcodec.\n"
                   "The output format is guessed according to the file extension.\n"
                   "\n", argv[0]);
            return 1;
        }

        in_filename  = argv[1];
        out_filename = argv[2];

        if ((ret = avformat_open_input(&ifmt_ctx, in_filename, 0, 0)) < 0) {
            fprintf(stderr, "Could not open input file '%s'", in_filename);
            goto end;
        }

        if ((ret = avformat_find_stream_info(ifmt_ctx, 0)) < 0) {
            fprintf(stderr, "Failed to retrieve input stream information");
            goto end;
        }

        av_dump_format(ifmt_ctx, 0, in_filename, 0);

        // Allocate the output AVFormatContext
        avformat_alloc_output_context2(&ofmt_ctx, NULL, NULL, out_filename);
        if (!ofmt_ctx) {
            fprintf(stderr, "Could not create output context\n");
            ret = AVERROR_UNKNOWN;
            goto end;
        }

        stream_mapping_size = ifmt_ctx->nb_streams;
        stream_mapping = av_mallocz_array(stream_mapping_size, sizeof(*stream_mapping));
        if (!stream_mapping) {
            ret = AVERROR(ENOMEM);
            goto end;
        }

        ofmt = ofmt_ctx->oformat;

        for (i = 0; i < ifmt_ctx->nb_streams; i++) {
            AVStream *out_stream;
            AVStream *in_stream = ifmt_ctx->streams[i];
            AVCodecParameters *in_codecpar = in_stream->codecpar;

            // Streams other than audio, video, and subtitles are dropped
            if (in_codecpar->codec_type != AVMEDIA_TYPE_AUDIO &&
                in_codecpar->codec_type != AVMEDIA_TYPE_VIDEO &&
                in_codecpar->codec_type != AVMEDIA_TYPE_SUBTITLE) {
                stream_mapping[i] = -1;
                continue;
            }

            stream_mapping[i] = stream_index++;

            // Add an audio/video stream (AVStream) to the output
            out_stream = avformat_new_stream(ofmt_ctx, NULL);
            if (!out_stream) {
                fprintf(stderr, "Failed allocating output stream\n");
                ret = AVERROR_UNKNOWN;
                goto end;
            }

            // Configure the AVStream's AVCodecParameters; here the parameters
            // of the corresponding input stream are copied directly
            ret = avcodec_parameters_copy(out_stream->codecpar, in_codecpar);
            if (ret < 0) {
                fprintf(stderr, "Failed to copy codec parameters\n");
                goto end;
            }
            out_stream->codecpar->codec_tag = 0;
        }
        av_dump_format(ofmt_ctx, 0, out_filename, 1);

        if (!(ofmt->flags & AVFMT_NOFILE)) {
            ret = avio_open(&ofmt_ctx->pb, out_filename, AVIO_FLAG_WRITE);
            if (ret < 0) {
                fprintf(stderr, "Could not open output file '%s'", out_filename);
                goto end;
            }
        }

        // Write the file header
        ret = avformat_write_header(ofmt_ctx, NULL);
        if (ret < 0) {
            fprintf(stderr, "Error occurred when opening output file\n");
            goto end;
        }

        while (1) {
            AVStream *in_stream, *out_stream;

            ret = av_read_frame(ifmt_ctx, &pkt);
            if (ret < 0)
                break;

            in_stream = ifmt_ctx->streams[pkt.stream_index];
            if (pkt.stream_index >= stream_mapping_size ||
                stream_mapping[pkt.stream_index] < 0) {
                av_packet_unref(&pkt);
                continue;
            }

            pkt.stream_index = stream_mapping[pkt.stream_index];
            out_stream = ofmt_ctx->streams[pkt.stream_index];
            log_packet(ifmt_ctx, &pkt, "in");

            /* copy packet */
            pkt.pts = av_rescale_q_rnd(pkt.pts, in_stream->time_base, out_stream->time_base,
                                       AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);
            pkt.dts = av_rescale_q_rnd(pkt.dts, in_stream->time_base, out_stream->time_base,
                                       AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);
            pkt.duration = av_rescale_q(pkt.duration, in_stream->time_base, out_stream->time_base);
            pkt.pos = -1;
            log_packet(ofmt_ctx, &pkt, "out");

            // Write the AVPacket data
            ret = av_interleaved_write_frame(ofmt_ctx, &pkt);
            if (ret < 0) {
                fprintf(stderr, "Error muxing packet\n");
                break;
            }
            av_packet_unref(&pkt);
        }

        // Write the file trailer
        av_write_trailer(ofmt_ctx);

    end:
        avformat_close_input(&ifmt_ctx);

        /* close output */
        if (ofmt_ctx && !(ofmt->flags & AVFMT_NOFILE))
            avio_closep(&ofmt_ctx->pb);

        // Free the output AVFormatContext
        avformat_free_context(ofmt_ctx);

        av_freep(&stream_mapping);

        if (ret < 0 && ret != AVERROR_EOF) {
            fprintf(stderr, "Error occurred: %s\n", av_err2str(ret));
            return 1;
        }

        return 0;
    }

A small question:

In the official example above, audio and video data are written interleaved. Would it also work to write all the video first and then all the audio?

A colleague once raised this question and said that he had tested it without problems: the audio and video stayed in sync. A search online also turns up articles that report having tested it and say it works.

Is that really okay?

In fact, it depends on the container format. Some formats need the audio and video data to be interleaved; the TS stream is one example, and writing all the video before the audio causes problems there. In formats where the audio and video data are stored separately, such as mp4, there is no problem.

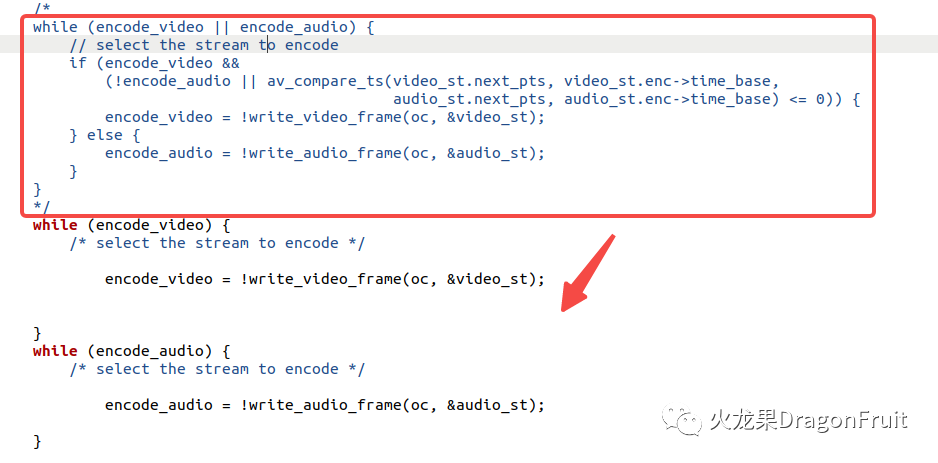

This can be verified by modifying muxing.c in the FFmpeg source code:

First, change the macro definition STREAM_DURATION from 10.0 to 1000.0. This value represents the total duration of the audio and video data written.

Then modify the original logic to write video first, and then write audio, as shown below:

The red box represents the original logic, and the following two while loops are used to write video and audio respectively.

Run the program twice to output a TS stream and an mp4 file respectively, and then verify playback with ffplay:

The mp4 plays normally, but the TS stream plays with picture only and no sound.