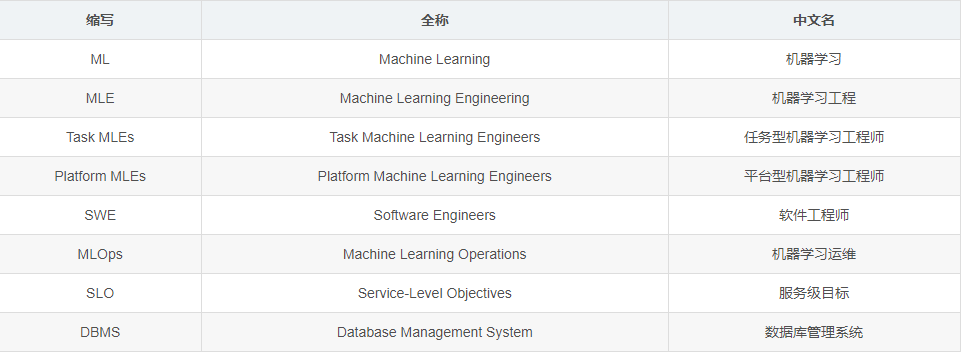

Before the article begins, let's take a look at the terminology:

Automating the end-to -end machine learning (ML) lifecycle, even for a specific prediction task, is neither easy nor straightforward. People have been talking about machine learning engineering (MLE) being a subset of software engineering, or should be treated as such. But for the past 15 months as a PhD student, I've been thinking about MLE through a data engineering lens.

Task-based machine learning engineer

There are two types of business-critical machine learning engineers (MLEs). The first are Task Machine Learning Engineers (Task MLEs), who are responsible for maintaining a specific ML pipeline (or a small set of ML pipelines) in production. They focus on specific models for critical business tasks. They are the ones who get called when the top line indicator goes down and are charged with "fixing" something. They are the ones most likely to tell you when a model was last retrained, how it was evaluated, etc.

Task MLEs have too much to do. Data scientists prototype models and come up with functional ideas, while Task MLEs need to “productionize” them. This requires writing pipelines that turn data into model inputs, train and retrain the model, evaluate the model, and output the predictions somewhere. Then, Task MLEs need to be responsible for deployment, which usually requires a staged process including testing, go-live, etc. Task MLEs then need to do "monitoring" to quickly diagnose and respond to ML-related errors.

I used to be a Task MLE. I did all these things and it was terrible. When I left the company for my Ph.D. and job handover, it took me about a month to create detailed notes detailing how the end-to-end ML lifecycle worked. When writing documentation, I often wonder why I do this at the time, such as why when the model is retrained, the training set is automatically refreshed, but the evaluation set remains the same, requiring me to manually refresh it? I've never wanted to be scientifically lax, but I've often encountered experimental code in my own model development process where the assumptions made during model training didn't hold up during the evaluation phase, let alone after deployment went live.

Sometimes I get so scientific that the business loses money. I automate a hyperparameter tuning procedure that splits the training and validation sets into many folds based on time (multi-fold cross-validation) and picks out the hyperparameters that perform best on average across all sets. I only realized after the fact how stupid this was. I should choose the hyperparameters that produce the best model in the latest evaluation set.

I've done enough research on Production ML now to know that simple overfitting to the latest data and constant retraining is a useful little trick to improve the performance of the model. Successful companies do this.

I'm confused when people say that small companies can't retrain every day because they don't have the budgets of giants like FAANG (ie Facebook, Amazon, Apple, Netflix, Google). Retraining many xgboost or scikit-learn models can cost a few dollars at most. Most models are not large language models. I learned this roundabout way while doing ML monitoring research. I've asked Task MLEs at many small companies if and how they monitor distribution shifts in their pipelines, and most of them mention hourly, daily, or weekly retraining schedules.

"I know this doesn't really solve the data drift problem," a Task MLE once told me coyly, as if they had confessed a secret they were afraid to write in a performance review.

"Has the model's performance picked up?", I asked.

"Well, yes..." he said in a long voice.

"Then it's addressing the data drift problem," I say matter-of-factly, with an inflated self-confidence as a researcher.

Anecdotes like this really upset me. I guess it's because of what I thought were important and interesting questions, and now there's nothing but interesting left. The researchers considered distribution shift to be very important, but model performance issues stemming from natural distribution shift suddenly disappeared after retraining.

I'm having a hard time finding useful facts in data drift, the actual problem. It seems obvious now: Data and engineering issues—cases of sudden drift—triggered model performance degradation. It is possible that yesterday's job of generating the contextual features failed, so we can only use the old contextual features. It's possible that a data source was corrupted, so a bunch of features became null. It could be this way, it could be that way, but the end result is the same: the data looks different, the model is underperforming, and business metrics suffer.

Platform Machine Learning Engineer

Now feels like a good time to introduce a second type of machine learning engineer: Platform MLEs, who help Task MLEs automate the tedious parts of their jobs. Platform MLEs build pipelines (including models) that support multiple tasks, while Task MLEs solve specific tasks. This is similar to, in the software engineer world, building infrastructure versus building software on top of infrastructure. But I call them Platform MLEs, not Platform SWEs, because I don't think it's possible to fully automate ML without knowing enough about ML.

The need for Platform MLEs materializes when an organization has more than one ML pipeline. Some examples of the differences between Platform MLEs and Task MLEs include the following:

-

Platform MLEs are responsible for creating function pipelines; Task MLEs are responsible for using function pipelines;

-

Platform MLEs are responsible for training the framework of the model; Task MLEs are responsible for writing the corresponding configuration files for the model architecture and retraining plan;

-

Platform MLEs are responsible for triggering ML performance degradation alerts; Task MLEs are responsible for taking action on the alerts.

Task MLEs aren't just for companies that aspire to FAANG scale. They typically exist in any company with more than one ML task. This is why, I think, Machine Learning Operations (MLOps) is currently speculated to be a very lucrative segment. Every ML company needs features, monitoring, observability, and more. But for Platform MLEs, it is easier to build most of the services yourself: (1) write a pipeline that refreshes the feature table daily; (2) use normalized logs across all ML tools; (3) save versions of datasets information. Ironically, MLOps startups are trying to replace Platform MLEs with paid services, but they are asking Platform MLEs to integrate those services into their companies.

As a bystander, some MLOps companies tried to sell services to Task MLEs, but Task MLEs were too busy looking after the models to go through the sales cycle. Looking after a model is a tough job that requires a lot of focus and attention. I hope this situation will change in the future.

At the moment, the Platform MLEs responsibilities that appeal to me most are monitoring and debugging sudden data drift issues. Platform MLEs have the limitation that they cannot change anything related to the model (including inputs or outputs), but they are responsible for finding out when and how they are broken. State-of-the-art solutions monitor the coverage (i.e. missing parts) and distribution of individual features (i.e. inputs) and model outputs over time. This is called data validation. Platform MLEs trigger alerts when these changes exceed a certain threshold (for example, a 25% drop in coverage).

Data validation achieves huge recall. I'd wager that at least 95% of sudden drifts, mostly caused by engineering issues, are caught by data validation alerts. However, its precision is poor (I bet less than 20% for most tasks) and it requires a Task MLEs to enumerate all features and output thresholds. In practice, precision may be lower because Task MLEs suffer from alarm fatigue and silence most alarms.

Can we trade off precision for recall? The answer is no, because high recall is the whole point of a surveillance system. To catch bugs.

Do you have to monitor every function and output? No, but alerts need to be at the level of a column, otherwise they will be inoperable for Task MLEs. Saying that PCA component #4 drifts by 0.1 for example is useless.

Can you ignore these alerts by triggering a retrain? No, there is no value in retraining on invalid data.

For a while, I thought data validation was a proxy for the monitoring of ML metrics like accuracy, precision, recall. Due to the lack of true labels for the data, ML metrics monitoring is almost impossible to do in real-time. Many organizations only get tags on a weekly or monthly basis, both of which are too slow. Also, not all data has labels. So all that's left to monitor is the input and output of the model, which is why everyone monitors input and output.

I couldn't be more wrong. Assuming Task MLEs have the ability to monitor real-time ML metrics, data validation is still useful at this point for the following reasons:

-

First, models for different tasks can be read from the same features. Multiple Task MLEs can benefit if a single Platform MLEs can correctly trigger a feature corruption alert.

-

Second, in the era of modern data stacks, model features and outputs (i.e., feature storage) are often used by data analysts, so data accuracy needs to be guaranteed. I once ran through a bunch of queries in Snowflake without realizing that half of an age-related column was negative, and shamelessly introduced this information to a CEO.

I've learned that it's okay to make this kind of mistake. Big data can help you tell any story you want, right or wrong. The only thing that matters is that you hold fast to your incorrect opinions; otherwise people will doubt your abilities. No one else will review your techniques for using Pandas data frames in Untitled1.ipynb. They won't know you've screwed up.

I hope the above paragraph is a lie. I'm only half kidding.

other thoughts

The need for assurance of the quality of ML data and models (i.e. Service Level Objectives, or SLOs for short) brought me to the heart of my first year of research: the role of the MLE, whether task-based or platform-based, to ensure this SLO satisfied. This reminds me of data engineering more than any other role. Simply put, data engineers are responsible for exposing data to other employees; ML engineers are responsible for ensuring that this data and its accompanying applications (such as ML models) are not garbage.

I think a lot about what it means to have good model quality. I hate the word quality. It's a vaguely defined term, but the reality is that every organization has a different definition. Many people think that quality means "not stale", or ensuring that the feature generation pipeline runs successfully every time. It's a good start, but maybe we should do better.

Through the data SLO perspective, data validation is a successful concept because it explicitly defines the quality of each model input and output in a binary fashion. Either at least half of the features are missing, or none. Either age is a positive number or it is not. Either the record conforms to a predefined pattern, or it doesn't. Either meet the SLO or not.

Assume that each organization can clearly define their data and model quality SLOs. In an ML environment, where should we validate data? Traditionally, data-centric rules were enforced by the DBMS. In his paper introducing the Postgres database, Stonebraker succinctly explained the need for the database to enforce rules: Rules are difficult to enforce at the application layer because applications often need to access more data than transactions need. For example, the paper mentions a database of employees whose rules are that Joe and Fred need to have the same salary; rather than having the application query Joe and Fred's salary and assert equality each time Joe or Fred's salary is needed, it is better to use the data enforced in the manager.

A year ago, my mentor told me the phrase "Constraints and Triggers are needed to keep ML pipelines healthy", and I remembered it even though I didn't fully understand what it meant. As a pre-Task MLE, I think that means using code to log mean, median, and aggregation of various inputs and outputs, and throwing errors when data validation checks fail, which is what I do at work .

Now that I have more experience with Platform MLEs, I don't think Task MLEs should do these things. Platform MLEs have data management capabilities, while Task MLEs have application capabilities or are responsible for the downstream part of the ML pipeline. Platform MLEs should enforce rules (e.g. data validation) in the feature table so that Task MLEs are alerted for any errors when querying. Platform MLEs should execute triggers like the various ad-hoc post-processing Task MLEs do on forecasts before presenting them to customers.

I also thought a lot about how to make it easier for people to understand "model quality". An organizationally specific definition of model quality helps explain why ML companies have their own Production ML frameworks (eg TFX), some open source and some closed. Many new frameworks are emerging as part of MLOps startups.

I used to think that the reason people wouldn't switch to a new framework was that rewriting all the plumbing code would be cumbersome, that's true, but the webdev ecosystem is a counter-example: if people get the benefit, they rewrite the code. The only difference is that current ML pipeline frameworks are rarely self-contained and cannot be easily plugged into various data management backends.

The ML pipeline framework needs to be tightly coupled with the DBMS that understands the ML workload, the DBMS needs to know what kind of triggers Task MLEs want, understand the data validation and tune alerts to have good precision and recall, and guarantee some scalability sex. Maybe that's why many of the people I've been talking to lately seem to be turning to Vertex AI (a database-like service that can do anything).

It feels weird to do research on all of this. It's not like many of my friends' Ph.D.s, where I'm supposed to ask a series of scientific questions and run a bunch of experiments to come to a conclusion. My PhD thesis feels more like an exploration where I study how data management works, become a historian of the craft, and try to make a point about how it will play out in the MLE ecosystem. It feels grounded theory, and I'm constantly updating my views based on new information I learn.

To be honest, it's uncomfortable to feel like I'm changing my mind so frequently; I don't know exactly how to describe it. A person close to me told me that this is the nature of research. We don't know all the answers, let alone the questions, in the first place, but we'll develop a process to discover them. However, for the sake of my sanity, I'm looking forward to when I change my mind about Production ML less.

— Recommended reading —

" New Programmer 001-004 " has been fully listed

Scan the QR code below or click to subscribe now